Orchestrate request for server-side execution of Turing machine with XState


We built a Turing machine visualizer, but it does not execute machines itself: that is the job of an Erlang server. The visualizer needs to request the execution of machines, and XState is the perfect tool to orchestrate this alongside the other behaviors of the visualizer.

This article is the second in a series about our Turing machine visualizer built with XState.

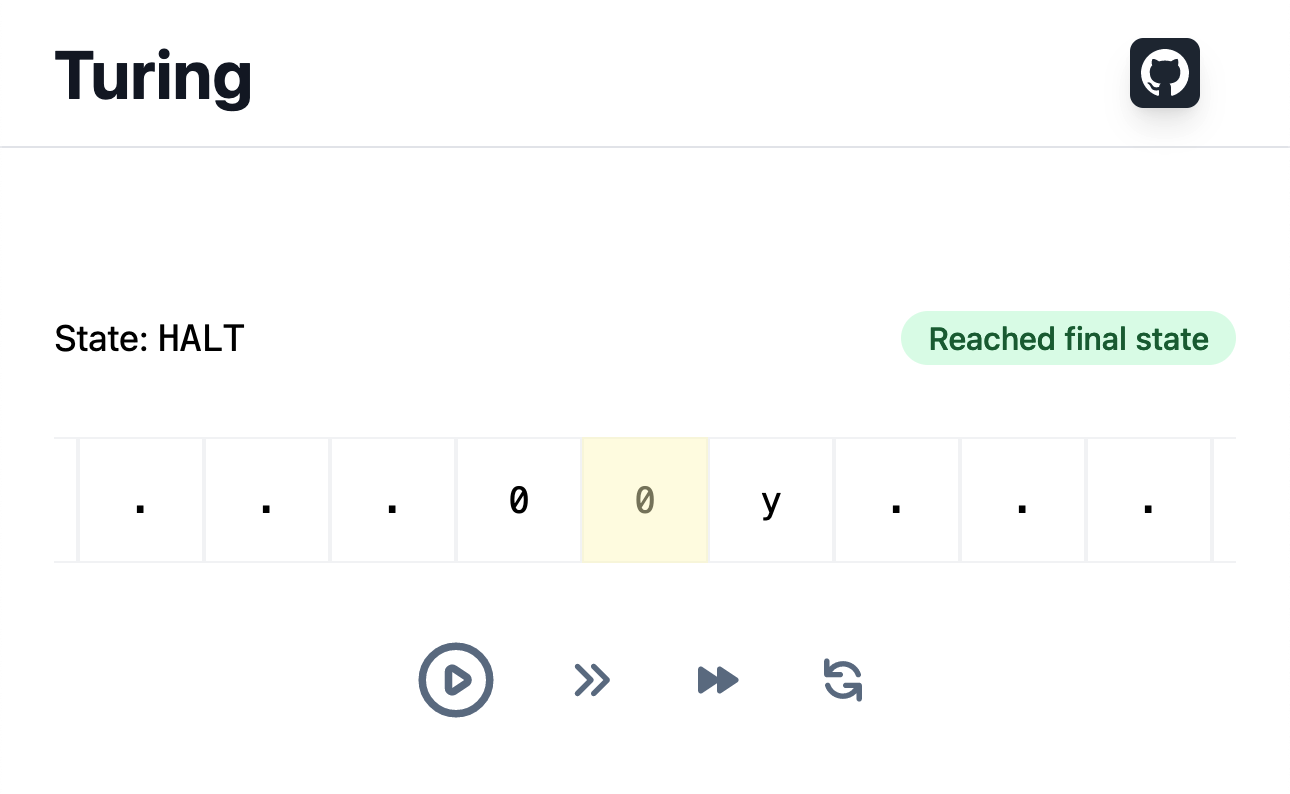

With Paul Rastoin, we built a Turing machine interpreter and a web visualizer to execute Turing machines easily. Here the visualizer has been used to determine whether the input is an even-length sequence of 0s (y means that yes, it is!):

The visualizer does not execute Turing machines itself. It sends the definition of the machine and the input to the remote interpreter. Then, if the machine could be executed, the server returns all the execution steps, which are displayed in the visualizer.

The challenge is to combine data fetching with the control of the visualizer itself, implemented in the previous article. Challenge accepted!

Synchronize input and machine configuration values with machine

We want the machine to always hold the most recent values of the input and machine configuration.

The machine listens to changes on input and machine configuration values, and updates its context:
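As a minimal, dependency-free sketch of this synchronization (not the original XState code): the event names `Set input` and `Set machine code` and the context keys are hypothetical. In an XState machine, each case would typically be an `assign` action attached to the corresponding event.

```ts
// Hypothetical context shape: the raw input and the machine configuration.
type SyncContext = { input: string; machineCode: string };

// Hypothetical events sent by the form fields when their value changes.
type SyncEvent =
  | { type: "Set input"; input: string }
  | { type: "Set machine code"; machineCode: string };

// Returns a new context holding the latest value carried by the event.
function syncContext(context: SyncContext, event: SyncEvent): SyncContext {
  switch (event.type) {
    case "Set input":
      return { ...context, input: event.input };
    case "Set machine code":
      return { ...context, machineCode: event.machineCode };
  }
}
```

Because the context is replaced rather than mutated, the UI can always read the latest values from a single place.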


On the Svelte side, the values of the form inputs must reflect the values in the context, and when the inputs change, they send events to the machine:


Send input and machine configuration to server for execution

We wrote an Erlang server to compute Turing machines with an input. It expects the input and the machine configuration to be sent as JSON in a POST request. When the user clicks the Load button (not shown in the GIF), we want to make this request.

A first implementation can be to create a sibling state to Playing steps, where we wait for a Load event to make the request:
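Modeled without XState, this first design could look like the sketch below. The state names follow the article. The `done` and `error` events are stand-ins for the promise service's `onDone` and `onError` transitions, the `string[]` type for steps is a simplification, and returning to Playing steps on error is an assumption.

```ts
type FlatState = "Playing steps" | "Making request to server";

type FlatEvent =
  | { type: "Load" }
  | { type: "done"; steps: string[] } // stand-in for onDone
  | { type: "error" }; // stand-in for onError

type FlatMachine = { state: FlatState; steps: string[] };

// Exactly one state is active at a time, so each event is handled by that state only.
function flatTransition(machine: FlatMachine, event: FlatEvent): FlatMachine {
  switch (machine.state) {
    case "Playing steps":
      // Only Load is modeled here; playback controls are omitted.
      return event.type === "Load"
        ? { ...machine, state: "Making request to server" }
        : machine;
    case "Making request to server":
      if (event.type === "done") {
        return { state: "Playing steps", steps: event.steps };
      }
      if (event.type === "error") {
        return { ...machine, state: "Playing steps" };
      }
      return machine; // Load is ignored while a request is pending
  }
}
```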


Playing steps is the state that manages the controls of the visualizer, such as starting and stopping it, increasing the speed, etc. The implementation of this state was detailed in the previous article.

Execute machine and input on server is a Promise service. When the promise resolves, the onDone transition is taken; if it rejects, the onError transition is taken instead.

In this implementation, the Playing steps and Making request to server states are mutually exclusive: they cannot be active at the same time. This is the main characteristic of a finite state machine, which can only be in one state at a time. While a request to the server is computing a new execution, the user can no longer interact with the visualizer, because that is only possible in the Playing steps state.

This may be the behavior you want to implement, and indeed this is what we wanted. But things get harder when we want to deal with error cases for the request.

Target a state when error occurs

If execution fails, we want to display an alert telling the user that an error occurred and that the visualizer does not show the latest execution, but the last one that succeeded. We need to store in the machine, somewhere, the state of the last execution.

We can target an error state when execution fails, and then use state.matches() to test whether this state is active:
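The idea can be sketched without XState as follows. The error state name is borrowed from later in the article and is an assumption at this stage, and `shouldShowAlert` is only a rough analogue of calling `state.matches()` with that state name.

```ts
type ErrorStateName =
  | "Playing steps"
  | "Making request to server"
  | "Failed to execute machine with input";

type RequestOutcome = { type: "Load" } | { type: "done" } | { type: "error" };

function withErrorState(state: ErrorStateName, event: RequestOutcome): ErrorStateName {
  if (state !== "Making request to server" && event.type === "Load") {
    return "Making request to server";
  }
  if (state === "Making request to server" && event.type === "done") {
    return "Playing steps";
  }
  if (state === "Making request to server" && event.type === "error") {
    return "Failed to execute machine with input";
  }
  return state;
}

// Rough analogue of state.matches("Failed to execute machine with input").
function shouldShowAlert(state: ErrorStateName): boolean {
  return state === "Failed to execute machine with input";
}

// The visualizer controls only respond while Playing steps is active.
function canUseVisualizer(state: ErrorStateName): boolean {
  return state === "Playing steps";
}
```

After a failed request, `shouldShowAlert` is true but `canUseVisualizer` is false, which is the limitation of this approach.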


But now, if an error occurred during the last execution, we can no longer interact with the visualizer, which is only interactive when Playing steps state is active. As a consequence, we need to find another way to store that an error occurred.

Track error with a property in context

Another idiomatic way to solve the issue is to store another property into the context:
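A dependency-free sketch of this variant is shown here. The property name `lastExecutionFailed` is hypothetical, and `done` and `error` again stand in for the service's `onDone` and `onError` transitions.

```ts
type TrackedMachine = {
  state: "Playing steps" | "Making request to server";
  steps: string[];
  lastExecutionFailed: boolean; // hypothetical property name
};

type TrackedEvent =
  | { type: "Load" }
  | { type: "done"; steps: string[] }
  | { type: "error" };

function trackedTransition(machine: TrackedMachine, event: TrackedEvent): TrackedMachine {
  if (machine.state === "Playing steps" && event.type === "Load") {
    // Reset the flag before each request so it never reports a stale error.
    return { ...machine, state: "Making request to server", lastExecutionFailed: false };
  }
  if (machine.state === "Making request to server" && event.type === "done") {
    return { state: "Playing steps", steps: event.steps, lastExecutionFailed: false };
  }
  if (machine.state === "Making request to server" && event.type === "error") {
    // Keep the previous steps: the visualizer shows the last successful execution.
    return { ...machine, state: "Playing steps", lastExecutionFailed: true };
  }
  return machine;
}
```

The machine returns to Playing steps in both cases, so the visualizer stays usable while the flag drives the alert.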


There is more work to do, as we need to reset the property before making another request to ensure it remains valid, but this works well and is definitely okay.

What I dislike about this solution, though, is that the request to the server is too tightly coupled with visualizer management. If tomorrow we want to allow users to perform some actions while the request is pending, we would need to substantially change the machine, because the way we implemented it, no other action can be performed while the request is in progress. We can prepare the machine for this in advance.

We had a hard time figuring out how to insert the request to the server into the visualizer management flow because the two parts are interleaved: they are neither totally independent nor totally mutually exclusive.

There are two tools in XState to implement interleaved behaviors: parallel states and services (especially machine services). Choosing between one or the other really depends on the context of use, but here I think parallel states are more suitable as both behaviors are more coupled than independent, and they would benefit from being written in the same machine. For your information, in the last article of this series we are going to use a machine service 👀.

Decouple request to server from visualizer management

We refactor the machine to use a parallel state:


Contrary to a compound state, which can only have one of its child states active at the same time, a parallel state has all its child states active at the same time. The machine is in several states at the same time, and state.matches() returns true for several values. Children of a parallel state are called orthogonal regions, in that they are independent.

An important feature of parallel states is that all active regions receive events at the same time, and can handle them separately. Here, when receiving a Load event, Managing input and machine execution state goes to its child state Making request to server that starts the request.
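To make the idea of orthogonal regions concrete, here is a small model that does not use XState. The region keys `visualizer` and `request` are shortened, hypothetical names for the two regions, and `regionMatches` is only a rough analogue of `state.matches()`.

```ts
// One active child state per orthogonal region.
type ActiveRegions = {
  visualizer: "Playing steps";
  request: "Idle" | "Making request to server";
};

type RegionEvent = { type: "Load" };

// The same event is offered to every region; each one decides how to react.
function parallelTransition(regions: ActiveRegions, event: RegionEvent): ActiveRegions {
  return {
    // At this stage the visualizer region does not react to Load.
    visualizer: regions.visualizer,
    // The request region starts the request (completion handling is omitted).
    request:
      regions.request === "Idle" && event.type === "Load"
        ? "Making request to server"
        : regions.request,
  };
}

// True for the active state of any region, so several names can match at once.
function regionMatches(regions: ActiveRegions, stateName: string): boolean {
  return regions.visualizer === stateName || regions.request === stateName;
}
```

After a Load event, both `Playing steps` and `Making request to server` match, which a flat machine cannot express.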

The previous implementation, which did not use parallel states, prevented users from interacting with the visualizer while a request was pending, and we want to keep this behavior. We must update our implementation.

First, we disable the visualizer when loading starts, and we ensure visualizer is only available after a machine has been executed:


When the machine starts or when an execution starts, the Idle state is entered. Note that the Load event is handled in both orthogonal regions and triggers different actions in each.

Now we need to enter the Playing steps state when the Turing machine execution ends, successfully or not. If the execution was unsuccessful, the user will visualize the last valid machine:


If execution succeeds, we assign the steps to the context and enter the Received response state. In case of error, we enter Failed to execute machine with input. Both states run the Allow to play execution steps action on entry, which sends an Enable playing execution steps event to the machine itself.

Here we rely on a corollary of the fact that all active orthogonal regions of a statechart receive events: they also receive events sent by other orthogonal regions. It’s called broadcasting. In Managing visualizer execution.Idle state we wait for Enable playing execution steps event to go to Playing steps state. Wiring finished! 😎
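The whole wiring can be modeled in plain TypeScript as a sketch (this is not XState's API). State and event names follow the article. The `done` and `error` events stand in for the service's `onDone` and `onError` transitions, and the region keys, the `string[]` step type and the internal event queue are assumptions made for illustration.

```ts
type VisualizerState = "Idle" | "Playing steps";
type RequestState =
  | "Idle"
  | "Making request to server"
  | "Received response"
  | "Failed to execute machine with input";

type MachineEvent =
  | { type: "Load" }
  | { type: "done"; steps: string[] }
  | { type: "error" }
  | { type: "Enable playing execution steps" };

type ParallelMachine = { visualizer: VisualizerState; request: RequestState; steps: string[] };

// Handles one event in both regions and collects events raised by entry actions.
function stepRegions(
  machine: ParallelMachine,
  event: MachineEvent
): { next: ParallelMachine; raised: MachineEvent[] } {
  let { visualizer, request, steps } = machine;
  const raised: MachineEvent[] = [];

  // Region: managing visualizer execution.
  if (event.type === "Load") {
    visualizer = "Idle";
  } else if (event.type === "Enable playing execution steps" && visualizer === "Idle") {
    visualizer = "Playing steps";
  }

  // Region: managing input and machine execution.
  if (event.type === "Load" && request !== "Making request to server") {
    request = "Making request to server";
  } else if (
    request === "Making request to server" &&
    (event.type === "done" || event.type === "error")
  ) {
    if (event.type === "done") {
      steps = event.steps;
      request = "Received response";
    } else {
      request = "Failed to execute machine with input";
    }
    // Entry action "Allow to play execution steps" of both final states.
    raised.push({ type: "Enable playing execution steps" });
  }

  return { next: { visualizer, request, steps }, raised };
}

// Processes an event, then every event it raised, so all regions see them too.
function sendToMachine(machine: ParallelMachine, event: MachineEvent): ParallelMachine {
  const queue: MachineEvent[] = [event];
  let current = machine;
  while (queue.length > 0) {
    const { next, raised } = stepRegions(current, queue.shift()!);
    current = next;
    queue.push(...raised);
  }
  return current;
}
```

Sending Load, then `done` or `error`, ends with the visualizer region back in Playing steps, while the request region records whether the last execution succeeded.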

We may think that this solution is overengineered and involves a lot more code to achieve the same result. And in a way it’s true, we wrote more code. But we wrote code more resilient to change. We made a clear distinction between different concerns.

If we want to know whether the error alert should be shown, we can test whether the machine is in the Failed to execute machine with input state, without needing to maintain a boolean in the context.

If in a week we want to allow users to interact with the visualizer while a request is pending, all we would have to do is remove an event listener:


As with many things in development, the best solution is context-dependent. Parallel states are perfect for letting a machine orchestrate more than one thing at a time, but they can lead to synchronization issues and less understandable code. I talked about this problem in a YouTube video.

Summary

In this article we used the context of a machine as the source of truth for inputs and established a two-way binding.

We made HTTP requests by means of services, which start when the state they are defined on is entered. There are different ways to keep track of the error state; we tried two of them, with a preference for the state-based one. 👀

Seeing that making requests and managing visualizer were not able to sit on the same chair at the same time, we decoupled these behaviors with a parallel state. We used tools brought by parallel states to synchronize orthogonal regions with each other, like broadcasting. That made the code more resilient to change.

So that was the second article of the series about the Turing machine visualizer showcase! The next articles will be released soon.

In the next chapter we are going to talk about the loading animation of the visualizer.

The code of the visualizer is on GitHub.

The series contains the following articles:

- Control tape of Turing machine visualizer with XState

- Orchestrate request for server-side execution of Turing machine with XState

- Prevent flickering loading animation with XState

- Create stale data indicator with XState

- Create a proxy state machine to drive CSS transitions on state changes with XState